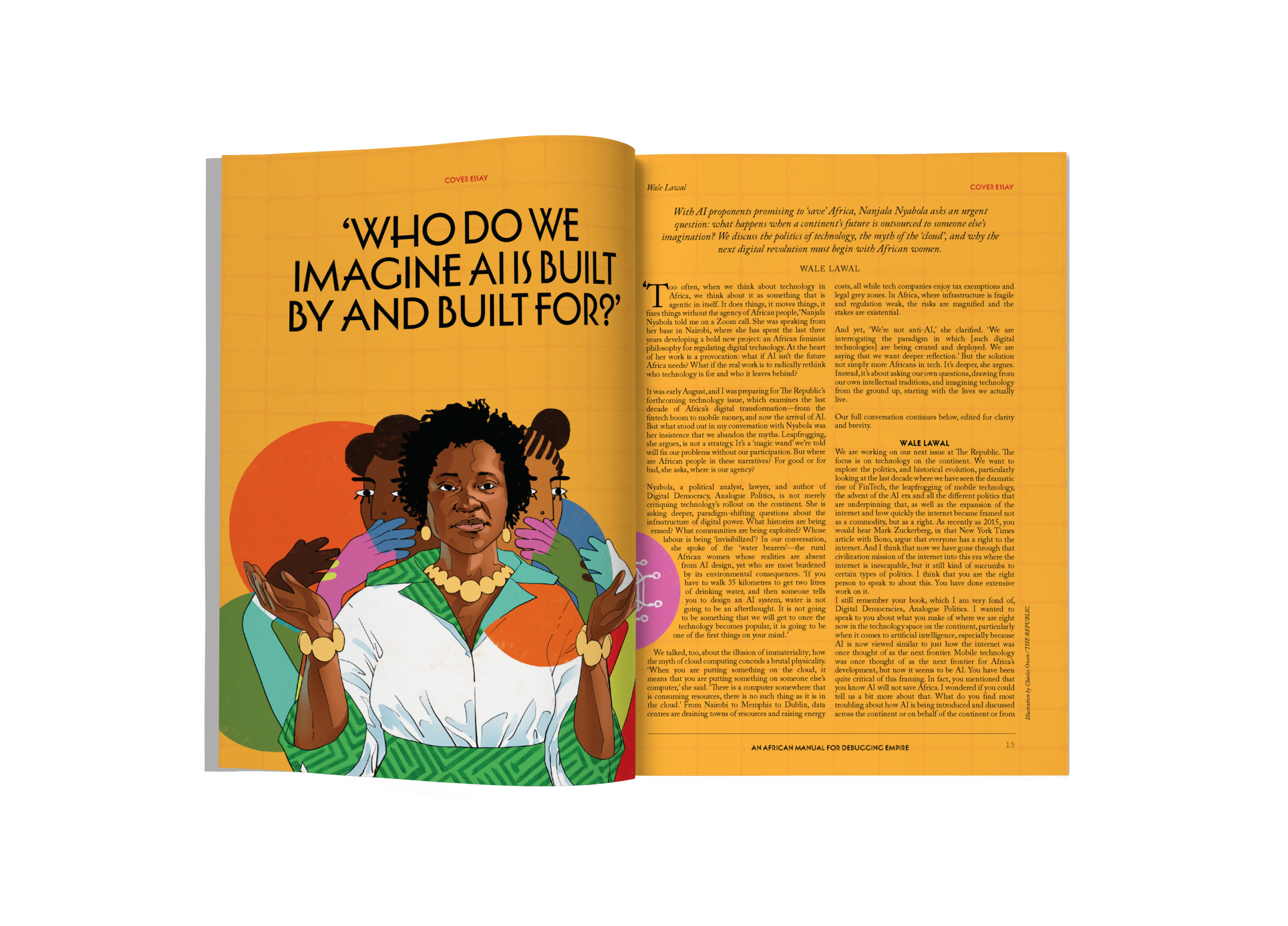

Illustration by Charles Owen / THE REPUBLIC.

THE republic interviews

‘Who Do We Imagine AI Is Built By and Built For?’

‘Too often, when we think about technology in Africa, we think about it as something that is agentic in itself. It does things, it moves things, it fixes things without the agency of African people,’ Nanjala Nyabola told me on a Zoom call. She was speaking from her base in Nairobi, where she has spent the last three years developing a bold new project: an African feminist philosophy for regulating digital technology. At the heart of her work is a provocation: what if AI isn’t the future Africa needs? What if the real work is to radically rethink who technology is for and who it leaves behind?

It was early August, and I was preparing for The Republic’s forthcoming technology issue, which examines the last decade of Africa’s digital transformation—from the fintech boom to mobile money, and now the arrival of AI. But what stood out in my conversation with Nyabola was her insistence that we abandon the myths. Leapfrogging, she argues, is not a strategy. It’s a ‘magic wand’ we’re told will fix our problems without our participation. But where are African people in these narratives? For good or for bad, she asks, where is our agency?

Nyabola, a political analyst, lawyer, and author of Digital Democracy, Analogue Politics, is not merely critiquing technology’s rollout on the continent. She is asking deeper, paradigm-shifting questions about the infrastructure of digital power. What histories are being erased? What communities are being exploited? Whose labour is being ‘invisibilized’? In our conversation, she spoke of the ‘water bearers’—the rural African women whose realities are absent from AI design, yet who are most burdened by its environmental consequences. ‘If you have to walk 35 kilometres to get two litres of drinking water, and then someone tells you to design an AI system, water is not going to be an afterthought. It is not going to be something that we will get to once the technology becomes popular, it is going to be one of the first things on your mind.’

We talked, too, about the illusion of immateriality; how the myth of cloud computing conceals a brutal physicality. ‘When you are putting something on the cloud, it means that you are putting something on someone else’s computer,’ she said. ‘There is a computer somewhere that is consuming resources, there is no such thing as it is in the cloud.’ From Nairobi to Memphis to Dublin, data centres are draining towns of resources and raising energy costs, all while tech companies enjoy tax exemptions and legal grey zones. In Africa, where infrastructure is fragile and regulation weak, the risks are magnified, and the stakes are existential.

And yet, ‘We’re not anti-AI,’ she clarified. ‘We are interrogating the paradigm in which [such digital technologies] are being created and deployed. We are saying that we want deeper reflection.’ But the solution is not simply more Africans in tech. It goes deeper, she argues: it’s about asking our own questions, drawing from our own intellectual traditions, and imagining technology from the ground up, starting with the lives we actually live.

Our full conversation continues below, edited for clarity and brevity.

WALE LAWAL

We are working on our next issue at The Republic. The focus is on technology on the continent. We want to explore its politics and historical evolution, particularly looking at the last decade where we have seen the dramatic rise of FinTech, the leapfrogging of mobile technology, the advent of the AI era and all the different politics that are underpinning that, as well as the expansion of the internet and how quickly the internet became framed not as a commodity, but as a right. As recently as 2015, you would hear Mark Zuckerberg, in that New York Times article with Bono, argue that everyone has a right to the internet. And I think that now we have gone through that civilizing mission of the internet into this era where the internet is inescapable, but it still kind of succumbs to certain types of politics. I think that you are the right person to speak to about this. You have done extensive work on it.

I still remember your book, which I am very fond of, Digital Democracy, Analogue Politics. I wanted to speak to you about what you make of where we are right now in the technology space on the continent, particularly when it comes to artificial intelligence, especially because AI is now viewed much as the internet once was: as the next frontier. Mobile technology was once thought of as the next frontier for Africa’s development, but now it seems to be AI. You have been quite critical of this framing. In fact, you have said that AI will not save Africa. I wondered if you could tell us a bit more about that. What do you find most troubling about how AI is being introduced and discussed across the continent or on behalf of the continent or from the context of the continent?

NANJALA NYABOLA

The most important thing to say from the outset is that there is insufficient interrogation of the concepts that we use to discuss Africa. That is the simplest entry point, because I think sometimes, we are given to oversimplification. We are given to seeking out convenient narratives. We are given to importing concepts wholesale without unpacking well, what does it mean in this context? What is the history and politics? And that creates a very murky environment in which we become really vulnerable to perpetrating very harmful and incomplete narratives. With that background in mind, I think one of the biggest challenges when thinking about digital technologies in Africa is that it is always applied in an aspirational sense that is abstracted from the reality of the people who live on the continent. And Africa, as an idea, is sort of wielded in a way that is devoid of humans.

When you think about concepts like leapfrogging, for example, what you are saying is that the insertion of technology is going to allow societies to surmount a lot of complicated social, economic and political challenges without the participation of people. It is not people who are going to make those changes. It is not choices that people make. It is not agency. It is this magic wand that is going to be inserted, it is going to fix all the problems, and everything is going to be okay. So, it is in the imaginary. And this concept of a socio-technical imaginary comes from Jean and John Comaroff, who are a pair of anthropologists. The idea of a socio-technical imaginary is that we think about technology as being embedded in a social and a technical context, that it is not something that just exists in an ether but actually behaves or is shaped by the choices that individuals take. But too often, when we think about technology in Africa, we think about it as something that is agentic in itself. It does things, it moves things, it fixes things without the agency of African people. And this has been a long-running critique of mine when we think about social media, mobile money, and AI. Where are the African people in this narrative, for good or for bad? Where are the realities of Africans, the political and social contexts? Where are the realities of policymakers in Africa and the choices that they make or fail to make in these contexts? So, when you think about AI and this whole narrative about how AI is going to fix everything, it confers a certain amount of agency on what is ultimately a tool and then it takes that agency away from the people who are supposed to use the tool for it to be responsive to their context.
It is not that we are going to find ways of shaping this tool in a sense that is meaningful to us, or that is consistent with our politics in our context, or whatever. It is that the tool is going to be inserted and then, magically, everything is going to be fixed. And it is kind of like evading the complicated questions of history and the complicated questions of impact. And for me, the complication with AI goes beyond the shiny things that are happening on the surface. Have we thought carefully about the environmental impact, the political impact, the impact on labour and labour rights, the consumption of water in water-scarce contexts, power, the racial dynamics that are embedded in how AI is shaped, the job losses that people are facing? There are so many things that are bigger than just ‘let’s throw AI at it and everything’s going to be okay.’ And for me, that is not so much a criticism that I level solely at AI; it is a criticism that I level against all of these narratives and discourses about tech, against techno-determinism. This idea that on its own, without the agency, creativity and interventions of human beings, somehow the application of digital technology is going to resolve all of these complex problems that people have been grappling with for generations.

WALE LAWAL

I think that is a really brilliant way for us to start. I wanted to ask a follow-up to that, because I think it is important that the audience understands just where you are coming to this argument from. For the last three years, you have been working on developing an African feminist philosophy for the regulation of digital technologies. Can you tell us a bit more about that and what it would potentially mean for us to reimagine AI from the standpoint of African feminism?

NANJALA NYABOLA

I have been researching digital technologies for the better part of the last ten to twelve years. What I wanted to do was to take some time to resolve some of the questions about why I was seeing certain things that were so difficult to communicate with other people. So, when I wrote Digital Democracy, I was thinking about social media specifically and how people’s agency in Kenya was creating all of these positives and negatives. I was also thinking about its intersection with capitalism, its intersections with the preceding historical political context and why the same technology applied in a different context would yield such different outcomes. And there were some questions that were left over; mainly the question of why I was seeing these things that seemed so clear and so obvious to me, but so much of the mainstream—the people that I was talking to in tech, more broadly—were like, ‘Oh, I hadn’t thought about that. I hadn’t seen the world that way.’ And some of the questions really came from my experiences of being an African woman who grew up in an African city. It is a very specific context. I didn’t grow up in a village or in a rural context. So, there is this interaction between history and technology that cannot be generalized throughout the entire continent.

But at the same time, I do know what it is like to grow up in a water-scarce context. We had water issues throughout my childhood in Nairobi. And so, for me, there were questions that were so obvious to me precisely because of that lived experience. And what I wanted to do was to expand this thinking and to really lean into this thinking and say, ‘Well, where is the genesis of this disparity?’ And one of the things that you realize when you study philosophy is that there is a person that we imagine who is at the centre of the way in which we make sense of the world. In law, for example, there is this whole idea of the reasonable man standard. In criminal law, the standard for a lot of crimes is, would this be considered a reasonable action by a reasonable man? And you see it in cases of revenge, murder, assault and things like that. When juries are asked to grapple with what sentence they should give a person, or whether this is even a crime, there is a person that they have imagined, a reasonable man who is conferred with a specific capacity for thought and whatever, and that is the person that they measure the standard against.

And within criminal law, there has been a lot of grappling in the common law about this reasonable man standard, because, for example, in cases of domestic violence, usually women are harshly penalized because the reasonable man is a man who does not understand why women don’t leave abusive contexts. And you know, a lot of research has demonstrated the reason why women don’t leave is because they are the most vulnerable to excessive violence at the moment that they declare an intention to leave. Because I have a legal background, I took this philosophical foundation and I started thinking about tech. Well, who was the person at the centre of our imagination about what tech is? And you will find, for the most part, the vast majority of the digital technologies (and that includes AI and social media) are built with the assumption that there is a white, able-bodied, middle-class, upper-class man at the centre. And this assumption is borne out when we think about what constitutes harassment, what constitutes violence, what standard of action is deemed high enough for it to justify intervention by the people who own the companies.

Women have been harassed on social media for years and it has proven almost impossible to get these companies to take the harassment and the violence that women experience online seriously. Think about deep fakes, for example. I saw narratives about deep fake technology and my very first thought was, ‘This is going to be used to harass women.’ ‘This is going to be used to hurt women.’ That thinking is not coming out of abstractions, because as women, we have seen it. We have seen revenge porn being distributed as a way of hurting women, whether they are politicians or just ordinary women who broke up with someone because they didn’t want to be in a relationship anymore. The harms that we talk about in relation to tech, doxing and swatting, were first levelled against women because they wanted to play video games. This whole Gamergate scenario started because women wanted to play video games and wanted to talk about video games. So, it was this disparity that I really wanted to interrogate, but to go deeper and think about this: when we build AI, when we tout AI, when we celebrate AI, who are we imagining is at the centre of that imaginary that is created? Who do we imagine AI is built by and built for? And it started to become very clear that there is a disparity.

I have so many examples because I have spent a lot of time thinking about this. But ultimately, if an African rural woman was designing AI, was deploying AI, what would it look like? Leaning into that question and turning to the huge volume of work that African women have been doing over the last 60 to 70 years to theorize and map the world and to think about power and to think about society: that is kind of the basis of the research I am doing right now. And it is a long story, but the simplest conclusion is that there are ways of thinking about the world that emerge because of our position in the world and there are concerns that people have that are not reflected in how AI is being developed and deployed. I have already alluded to some of them: water, environmental impact, labour, whose labour counts as labour, where the money will go and how the proceeds will be distributed. These are all questions that African feminists have been grappling with for the last 60 to 70 years. And there is this body of work, and my task was to take that body of work and apply it in the context of AI regulation, and present that as a theory, a framework for thinking about regulation.

WALE LAWAL

What you said about law, philosophy and this idea of the reasonable man reminded me of how I studied economics. And in economics, we have this idea of the rational welfare maximizing man who is a white man, white middle class. He is from the West and cannot understand why people don’t seek to maximize wealth in the way that an American capitalist will. They don’t understand altruism. Even this idea of the collective; there is this tension between individualism and collectivism in economics, and this idea that economists have about collectivist societies being less advanced. And the same economists are always complaining about why their models don’t work for non-Western societies. But it is because you are creating these models with a white man as your frame of reference and you are trying to apply that to an economy that does not even recognize those aspects. So, it is very interesting.

NANJALA NYABOLA

It is not so much about them applying them to other contexts and it not working. It is also about them not applying it to themselves. What I am really trying to emphasize in this research is that just because these ideas emanate from African women doesn’t mean that they are only applicable to African women. Additionally, a secondary question for me was why wasn’t revenge porn an obvious question when people were building AI? Why wasn’t that an obvious concern? Because that was the first thing every woman I know thought about. They were like, ‘This is going to be used to hurt women, right?’ The European Union has a policy on deep fakes that recognizes that women in politics, particularly in Europe, but all over the world too, have been vulnerable to the use of deep fakes to discredit them as political actors. As a result, they have this legislation, this whole policy framework, that is supposed to prohibit and curb the use of deep fakes. But then, when you go to the EU AI policy, deep fakes are not considered as the highest level of risk. It is considered to be… There are about four levels of risk, and I can’t remember the exact language, but it is not considered as the highest level of risk.

Also, I was looking at that disparity and I thought, well, is it because the people who are at the centre of driving the deep fake policy are different from the people who are at the centre of driving the EU AI policy? Who do we believe is a natural subject of the different policies that are being made? One of the things I am trying to emphasize with this research is that the narrowing down of sources of knowledge we have about technology is resulting in these outcomes. What we want to do is to open that up again and have African feminism as a source. Let’s start from the fundamental questions and diversify the sources of knowledge that we have about the world and about how technology should be governed. Because here is this body of work that has been challenging economics, politics, water allocation and labour. African women have been doing this for so many years. So, what would it look like to broaden the sources of knowledge in general and not just say, well, we have to tweak the existing model so that African women are included? Does that make sense?

WALE LAWAL

Absolutely, it makes total sense. And I think it is something that I have seen you write about: that the end goal is not just having more representation or diversity. That is only the beginning of the work that then needs to be done. We are going to talk a bit more about that, but in the meantime, we have talked about the philosophy, or the philosophical questions and tensions around AI. I wanted to ask a question more about its physical ramifications, its physical consequences. There is this whole idea that AI is just something that lives on your phone. It is like a ghost in the machine. It is light or it exists in the cloud. But I think what people often forget is that the machinery or the infrastructure behind AI is massive. And it is not just massive; it is like a hungry beast in terms of the amount of energy and (physical) resources it requires. We live in this world where everybody says everything is now virtual. AI is going to accelerate that. We are moving beyond brick-and-mortar businesses. Everything can be done online. But we don’t seem to be having a conversation about just the infrastructural and environmental demands AI is placing on our societies. I wondered if you could talk a bit more about that, just in terms of what has come up in your own research; this idea that AI is weightless, it is in the cloud, it is immaterial. However, it does have material consequences. I wondered what you thought were some of the risks and challenges behind this myth of weightlessness, particularly for African communities.

NANJALA NYABOLA

It is so obvious to me, but I know that it is very controversial to a lot of people. One of the most poignant observations about the materiality of tech that I have ever heard (I can’t remember who said this, but I heard it in the context of a feminist convening on tech) was when someone said, ‘There is no such thing as the cloud.’ When you are putting something on the cloud, it means that you are putting something on someone else’s computer. There is a computer somewhere that is consuming resources, there is no such thing as it is in the cloud. For me, that sentence has a lot of semiotic value because it summarizes the intellectual sleight of hand that is at the heart of how we talk about digital technologies and how we govern them: that we need them to be immaterial in order for the incongruence of the policy space to survive. Because once they become material, then it becomes absurd that they are getting away with not being taxed and not being legislated and regulated. Take Coca-Cola: it is a multinational company, and one of the criticisms always levelled at Coca-Cola is that they take up potable water and use it to make soda and they put it in plastic bottles, and those plastic bottles go ahead and choke rivers. So, we complain about that because there is a materiality to the Coke bottle. And Coca-Cola gets taxed accordingly, right? There is a tax on sugar, and you can say this about any soda brand. That is not a specific criticism of Coca-Cola, but it is the fact that because we can hold the bottles of soda and we drink the bottles of soda, we are able to ascribe a certain value to the bottle of soda and justify the policy context in which sodas are regulated.

The intellectual sleight of hand that tells us that digital technologies exist in the cloud, that they don’t have a material format or material consequences, is what justifies the super profits. It is what justifies the tech-over-everything approach to regulation and governance. It is what justifies this unchecked consumption of natural resources. But the reality of it is that AI is material. There is an infrastructure. There are cables, supercomputers, cooling centres, and these things consume physical space. They have to buy land and build the processing centres. Then those computers get hot because of the concentration of energy. Those computers must be cooled down and they cannot be cooled down using salt water. They must be cooled down using fresh water. They are usually built close to towns where they are able to take labour and escape environmental regulation. So, the energy they use to run these supercomputers consumes hydrocarbons. They release hydrocarbons into the environment. There is something happening somewhere. Just because we don’t see it, and because our policymakers don’t want to regulate or discuss it, doesn’t mean it is not happening. And with AI, all of this has become turbocharged.

In Memphis, like many American cities, there is a lot of racial disparity. xAI put its largest supercomputer in a predominantly Black neighbourhood in Memphis, and although it has been less than two years, there has been a spike in respiratory illness. The (drinking) water is polluted. People are paying more for electricity because of the electricity demands that xAI’s Grok supercomputers are putting on the general electricity infrastructure. The costs of that are being passed on to consumers. In Ireland, it is estimated that Microsoft’s, but I think also Amazon’s, data processing centres consume the same amount of electricity as every single household in Ireland put together. And that cost is being passed on to the consumer: because of the demand on the grid and the maintenance of the grid, and because we want to attract these companies as they are so good for our economy, electricity becomes more expensive.

I was reading a study this morning about how this July was the hottest July in history in the world. The previous July was the hottest July in history in the world. Year on year, for the last five or six years, each year has been the hottest ever in history. In countries like Spain, where there is a proliferation of data centres, there is also this water scarcity. There is also this drought cycle that has become an issue. Small towns are running out of water; they are literally running out of water because the water is being diverted to cool down the data centres. This is happening in the largest economies in the world. What does that look like in Africa? Those are the kinds of questions that I think have to be put on the table. I go back to why I put the African woman, particularly the rural African woman, at the centre of my theorizing. This is because if you have to walk 35 kilometres to get two litres of drinking water, and then someone tells you to design an AI system, water is not going to be an afterthought. It is not going to be something that we will get to once the technology becomes popular, it is going to be one of the first things on your mind. How do we make this water efficient so that we don’t build a world in which more people have to walk 35, 50, 100 kilometres in search of two weeks’ worth of drinking water? These are the kind of questions that I want. That labour that they do, that walking, is labour; that carrying of the water on your back is labour. In my research, I call them the water bearers. In the context of AI, who are the water bearers, who are the people doing the invisible labour that makes these resource-hungry systems possible? And then what will that mean for Africa if this becomes the norm?
For me, that immateriality of AI and digital technology is really about this cognitive justification that policymakers and the people who build tech need to justify all of the exceptions that make the current socio-economic model of tech deployment possible.

WALE LAWAL

This is all very interesting to me because on the one hand, we are having all of these conversations about how we now have the red alert; climate damages are irreversible. They are going to happen. We all need to brace up for the impacts of climate change. Then you have this almost unchecked capitalist greed that is now affecting the environment even more. But also, you have the ways in which Africa is being propped up as this… It is almost like a resource grab. One of the things I was reading was how countries, particularly the UAE, are buying up land in Africa, particularly in eastern Africa, to offset their climate. They buy up the land so they can actually offset their climate greenhouse gases, which makes zero sense. You also have people buying up data centres and African governments being like, ‘Oh, but we are cash-strapped. We need the cash. We haven’t had enough of an opportunity to pollute the environment.’ At the end of this, the average person you know—as you have so compellingly described at the centre of your research being this rural African woman—is not involved in any of these conversations but will feel these impacts disproportionately. I think it is crazy how we have gone from just pretending to care that there was this environmental impact or this environmental crisis that we all needed to be aware of. Now, it is almost like we are in free fall and there is a kind of race for resources that is ridiculous.

I wanted to talk a bit more about what you mentioned earlier; you mentioned labour and what the water bearers look like when it comes to the conversation about AI. I think it is interesting because there was that recent Time investigation that revealed how OpenAI had been using Kenyan workers to label violent and explicit content. These workers were paid exploitative wages, at least that is what we know. They reported they were being paid exploitative wages, and they also experienced long-term trauma. It also reminds me of some of the reports that came out about Meta, about Facebook as well, in terms of content moderation, and how they were also exploiting Kenyan workers. As someone who is thinking about technology, who is Kenyan, based in Kenya, operating with Kenya as your centre, what was your reaction to some of these reports? How do you process what has been happening, especially the fact that it is happening in such proximity but also happening with such blatancy? And yet people are still described as invisible labour. Is it really invisible or hidden? How are you processing all of that?

NANJALA NYABOLA

For me, it is not inconsistent with the way Kenyan politicians behave. There is a very chilling thought that I have had when I look at this current administration, but really the last two administrations in Kenya, which I am almost scared to verbalize on the record, but I have a deeper understanding of how people sold other people into slavery, because it is not just in tech. One of these governments’ explicit policies has been sending young people, especially young women, to the Gulf to be domestic labour, despite the spike in deaths and murders. People get killed and their bodies get held under the kafala system. When Israel began bombing Lebanon, there were, I don’t remember the exact number, a couple of hundred or thousand Kenyans who were stuck in Lebanon and their employers held on to their passports while they fled. And so, they yelled; these women couldn’t travel, and they were all huddled together in these centres. It was the same with Ethiopians. It was the same with Ugandans. They couldn’t get evacuated, so the bombs were falling and all they had was each other. There was one Kenyan woman whose employers locked her in the house for three weeks while Israel was bombing the town and left her in the house. Then they came back when the bombing ended; this was reported by the BBC.

This tells you there is a paradigm there. There is a paradigm in which politicians are facing this generation of young people who are too educated for agriculture… and climate is also part of this narrative, because the climate means that there is less availability of arable land. So, agriculture is not really on the table as an option for the vast majority of young people, but there is this massive youth population and they are educated enough that they can’t really work the land the way their predecessors did, but there is not enough waged labour to absorb them. So, the solution is to mortgage them, sell them and their labour to other parts of the world. It is a model that is used in the Philippines. The Philippines is another country that has had these issues with content moderation and AI moderators, as well as the low wages, mental health and all of that exploitation. It started in the Philippines and then when the Philippine government started to clamp down, they started to look at African countries where the standard of English was high enough that people could read what was being posted from all over the world in English. But then the job essentially meant that you were sitting in front of a computer for 12 to 18 hours a day looking at the worst of humanity.

The reason I call this invisible labour and the reason I am interested in this question is because these people get paid $2 a day, which feels like a lot of money in a developing country, but the value that they create for the AI companies is in the insane order of magnitude; it is in the trillions. When Microsoft launched Tay, which was their first AI chatbot, it was trained on social media. Tay had an X account and that one was not moderated. There was no cleanup that was happening on the other side. Tay became racist in two days (two days!) because the biggest chunk of what is on the internet is awful. We, those of us who are chronically online, sort of interact with about 10 to 30 per cent of what is on the internet. The 70 per cent is awful. It is child pornography, violence against women and people in general. The largest groups on Facebook in certain countries are all groups that are about violence against women. It is people documenting war atrocities, but not for human rights violation. It is for titillation.

Al Qaeda and ISIS all run internet accounts to publicize their atrocities. There is good; I am never going to say that there is no good on the internet. But there is also the fact that a huge chunk of what people put online is the worst version of themselves. And without content moderation and the intervention of human beings, all of these AI bots become terrible. This has been the consistent experience; even Gemini apparently recently went into a self-hate spiral. The question then becomes this: how do we value the labour that content moderators do? How do we see it? How do we value it when OpenAI can become a trillion-dollar company without ever turning a profit? They have never, in their history, turned a profit. They have never sold a product that people said is objectively useful, like a spoon or even a mobile phone, yet Sam Altman is a [billionaire]. Bill Gates is [a billionaire]. Elon Musk is [a billionaire]. Where is the exchange of labour happening? That is why it is invisible labour, but also that is why it is consistent with a context in which other kinds of violation are happening; it is capitalist exploitation in its rawest form.

For a lot of African societies, especially for young Africans, we are told, especially by those who wield power over us, that our only value to the capitalist chain is in our labour. It is not in our intellectual contributions. It is not in our ability to build things or in our ability to organize things. It is really in our ability to produce the grungiest labour, without which there would be no value, but which is not valued enough in the capitalist system to be rewarded accordingly. It doesn’t surprise me. It is very consistent with what we see in this domestic labour context. One of the most exploitative industries in the world is shipping, with workers mostly from South Asia, especially Indian and Bangladeshi people. When COVID hit and shipping got suspended, all these people were stuck on the ocean for years because the shipping companies take their passports and they were not allowing the ships to dock because people were afraid of COVID. These people didn’t have the right to disembark. But without them, there is no global trade. Do ordinary people who order things online, who ship things online, know that there is this exploited class of people who make shipping possible? They don’t. And these things are not unusual. It is very consistent with hyper-capitalism and the bigger history of capitalism. I think what is different this time is that the people who are being exploited are speaking up and saying that they no longer want to be a part of it. Hence the lawsuits and pushback that started with the Kenyan content moderators.

WALE LAWAL

During COVID, we saw who an essential worker was. What was also interesting about that time was that, back then, the argument was that now that we know that this is essential labour, will we reward it even more? But that never happened. It was a very interesting paradox where the pandemic forced us to understand what labour is actually essential. We understood that it was a labour that the world had been dismissive of and exploited. But then there was also this push that this would make us recalibrate our value system. And, of course, it didn’t. If anything, I find that much of this kind of just hunger around AI and some of the ways in which AI products and tools have been rolled out is directly linked to the sense of recklessness that came around the pandemic. What I mean is that the tech industry had this whole philosophy of move fast, break things but at least there was a time when you wouldn’t release a product that wasn’t good enough. Now people are releasing products that should be nowhere near the public, with this mindset that we will just fix it over time. I wonder what you make of that, and I think it is similar to what you said where you are releasing a mobile phone; that Nokia 3310 was perfect in all the functions, but now you are releasing ChatGPT. And ChatGPT is saying nonsense, half the time it is telling you the wrong thing and nobody is getting fined or sued.

NANJALA NYABOLA

Nobody is getting sued, nobody is being held accountable. It is part of this whole idea that the rules don’t apply to us, and so we can do whatever we want, because we make all this money. One of the things that has come out of my research that I want to put before government is how much of that money is actually accruing; how much of that money is actually taxable income that you can use to invest in other things; or how much of it is really just that you are creating value that they scrape off and repatriate to the mother country. Something that gets me is that it is not unprecedented. This is how mercantilism has always worked. We talk about the diamond trade. That is like the most recent historical analogy; African people were digging up all of these shiny rocks from the ground and being paid pennies on the hour, and all the value was being accrued by all of these companies that were transmitting that value to other parts of the world. And then it became war. It became blood diamonds. It became apparent that there was a mismatch between the value that was being accrued to these shiny rocks and that which was being accrued to the labour that was required to extract them from the ground. And then came the Kimberley Process. It is like we have done this before. We know that this is how mercantilism works. The reason why the tech companies can operate with these policy exemptions or legal exemptions is because they have managed to convince us of two things. One is that all of these are happening in the cloud. It doesn’t have materiality; it doesn’t have an impact on it. It is all good. Nothing bad is happening in the background. Secondly, they have managed to convince us that the internet and the platforms that we use to connect to the internet are the same thing. And they are not.

The internet as an infrastructure might be a public good, like piped water and sewage, but the platforms that we use to connect to the internet are private companies and these private companies have significant impact on the natural environment and human society. That is why I interrogate this whole narrative of developmentalism that is embedded in the way we do tech because they are using the narrative of the internet as a public infrastructure to justify the amassing of these super profits by these private companies. The negative outcomes are public, and all of the profits are private, and that is something we have to challenge significantly.

When Google tells you we are going to build… or when Meta tells you we are going to build Free Basics and it is going to put the internet in everyone’s phone, and they say, well, that is good, because the internet is a public good. We take it a step further and we say, ‘Well, what version of the internet are the poor people of Ethiopia getting?’ It is one that is curated to sell advertising, to make money. It is one where AI is applied to extend the amount of time we spend on the internet at the expense of our private relationships and social interactions. When Meta says that they are going to build you, and Mark Zuckerberg said this on the record, ‘We are going to build you an AI assistant embedded in all of our applications’, that means you don’t have to have real friends. What is the intent behind that? The intent behind that is that you sit at your computer talking to your fake friend who endorses every bad decision that you have ever made so that they can sell you more jeans, more sugar and more things. The end goal is always advertising, and I think that is why it is so important to do philosophical and narrative interrogation work.

You haven’t asked this yet, but this is really the impetus behind my research: I want Africans to lean into their lived experiences and ask the questions that make sense to them, and not just give power to the questions that have emerged from Western academic, political and social practice. What are the questions that make sense to us? Water matters to us because most of us live in environments where we are paying council and government taxes, but we are also paying for private water. We are also paying for private security, and we are the ones who have to walk many kilometres in search of water. We live in big cities with the constant threat of electrical blackouts. So electrical consumption is important to us. Can you imagine you have a generator in your house in Lagos, and you are thinking about rolling blackouts and then Meta opens a data centre; it means the little electricity that was coming is not coming anymore, and the cost of generating. In Nairobi, you can plan your day around blackouts; the electricity is going to be off on Tuesday from 9 a.m. to 5 p.m. In comes this big tech company, and now I have to pay more for less electricity. Cape Town had a water shortage for two years. Are these cities that can afford to adopt Western paradigms of AI without thinking about what it means for the local context? Thinking about the materiality: that would be my sort of call to action for African researchers, philosophers and practitioners in this space. Stop copying what, you know, James Smith from Cupertino is doing. What does it mean for you sitting in Lagos, Nairobi, Johannesburg, Addis Ababa?

WALE LAWAL

That is a powerful question, and it is a very necessary question. It is a necessary one to ask because when it comes to these types of technologies, inevitably, Africa is looked at as the final frontier. It is looked at as the growth frontier. If we can get all Africans on board, the population alone, the number of people… I look at the fact that when I think ten years ago, it was very common and very popular to say things like, I think even Zuckerberg had said (he is always saying some interesting things.)… About ten years ago, he visited some African countries, and he went to a particular country, I can’t remember which one now, but he was like, they don’t have water, but they have Facebook. And he was so proud of that. I think about it now, that is a scary sight. It is a very scary thing to say in the sense that, are we going to get to a future where the comment now becomes, ‘They don’t have electricity, but they have ChatGPT’? People don’t interrogate further. What are they using Facebook for? Are they using it to victimize each other? Are they using it to harass each other? Have you created a platform that enables the worst version of ourselves, our worst instinct in societies where we don’t have the infrastructure to even regulate and govern ourselves?

NANJALA NYABOLA

It is really important to deal with the consequences. Holding that distinction allows us to say we are not anti-AI, we are not anti-social media, we are not anti-digital technologies. We are interrogating the paradigm in which they are being created and deployed. We are saying that we want deeper reflection because we don’t want to live in a world where Ethiopians are given free internet and it becomes almost like a driver of civil war and there are no consequences for that; whereby Kenyan content moderators can go crazy and their mental health is damaged because they are creating surplus value for an American company; whereby all of these inequalities become embedded and deepened because we didn’t pay enough attention. When you present these criticisms, people often go, ‘Well, you are anti-AI.’ We are not anti-AI. We want an approach that is true and consistent with the realities in which we exist, the world that we live in today and the world that we want to live in tomorrow. This current approach is not going to cut it.

For me, it is not going to cut it, and simply adding Africans to it, saying diversity, is not the same as decolonization. Decolonization is not a call for diversity. It is not a call for representation. It is a call for dismantling and interrogating power structures and inequalities by thinking beyond the present. What’s the impact on future generations? What’s the impact on the natural environment? The sum total of my research is to say, well, these are things that African women, African feminists, have been thinking about for years. I wanted to leave you with one of my favourite pieces of research that I have engaged with. It was about mining in South Africa. This South African researcher was thinking about how the domestic labour that women do in mining communities in South Africa actually subsidizes mining companies because they are able to underpay the wages of the miners. She was studying the Marikana tragedy. She was observing how the platinum companies underpaid the mine workers, and the reason why they were still able to go back to work every day is because their wives and partners, the women who were in their homes, were economizing and finding different ways to supplement the income that made it possible for the men to continue to exist on these sub-optimal wages.

Basically, these super profits—platinum was, at one point, the hottest mineral in the world. Up to the Marikana disaster, it was the highest valuation that a platinum mine had received ever in history. This was being subsidized by women’s invisible labour, the domestic labour that is impossible to count. It made it possible for men to survive and subsist on the sub-optimal wages. I look at that paradigm, and I think, look at what’s happening in tech without the subsidizing of water bearers, all of these labourers who are underpaid and overworked. Sam Altman is a billionaire. Mark Zuckerberg is a billionaire. Bill Gates is a billionaire. And it is imperative that we move from diversity, and we move to decoloniality and start to ask these paradigm-shifting and foundational questions, otherwise, we end up replicating these patterns we have seen in mining, agriculture and shipping.

WALE LAWAL

I always like to leave the audience on a kind of, almost not necessarily optimistic, but on a positive note. You mentioned earlier that none of this is new regarding invisible labour. The difference now is that people are speaking up. When we even look at the investigation that we talked about earlier, OpenAI exploiting Kenyan workers; shortly after that, these workers organized unions to challenge exploitative contracts. What do you think that tells us about the future of tech labour, invisible labour, AI and technology more broadly on the continent? It is a powerful thing that this report came out and these workers have now just galvanized amongst themselves. Are you seeing similar trends? Is it a substantial movement? Is it a flash in the pan? I wanted to understand that a bit more based on what you have come across in your research.

NANJALA NYABOLA

A lot of Africans are so much smarter and more organized than we give ourselves credit for, because we have been through all of these cycles. There is so much work that we read, and we are so much more aware than I think our political leaders give us credit for, and that is signalled by the way in which all of these movements and pushbacks are happening. I think the biggest obstacle is our political class and the comprador class that is in bed with the capitalists in other parts of the world. And capitalist isn’t necessarily Western, because a lot of the Chinese tech companies are equally mercantilist and equally capitalist. The encouragement that I would give is that people are much more aware than we give them credit for and that they are willing to mobilize. There is an increasingly vocal demand for a different tech future and the goal for the thinker is to put order to that impetus. And the goal for the political actor, for the person who has power, is to hear and to respond to that. The positive message I would leave for African thinkers, African theorists, people who are thinking about AI and people who are thinking about tech is to continue to be brave and stand firm in your truth. There is a reason why you feel uncomfortable. There is a reason why the incongruence between the dominant narrative and your experience is making you uncomfortable. Lean into that discomfort, articulate that discomfort. Feel free to interrogate the world around you based on your standpoint as an African person; it is valid. Just because it comes from a different place doesn’t make it less valid. I hope that at the end of it, my research is taken as an invitation for more African people to ask these foundational questions, to engage with the philosophical dimensions and foundational issues, because there are answers there, not just for Africa, but indeed for the whole world.
